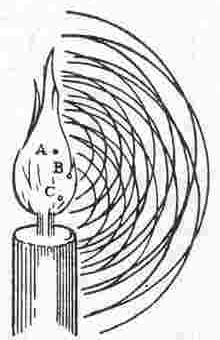

First of all, what is the coherence of light? If an extended source is radiating light, then in general every surface element of the source radiates independently of every other. When the waves from the different bits of the source are added together to give the resultant field at some point, the resultant is a superposition of components with randomly different amplitudes, phases and even, within limits, different frequencies. The result of this superposition of random contributions at some point P1 is unlikely to be the same as at some other point P2. What coherence theory aims to do is to predict how similar or how different the resultant fields are at any two points P1 and P2. The extent of the area over which the fields have an appreciable resemblance to one another is called ‘the area of coherence’. To produce clear interference or diffraction effects we have to be working with light from points within a coherence area.
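To make the idea of a coherence area concrete, here is a minimal Monte Carlo sketch. It is an illustration under assumed conditions, not anything taken from the text above. It models a one-dimensional strip source of width D as many point emitters that all radiate at one wavelength but with independent random phases. It then estimates the normalised correlation |μ12| = |⟨E1 E2*⟩| / sqrt(⟨|E1|²⟩⟨|E2|²⟩) between the fields at P1 (x = 0) and P2 (x = d) on a line a distance z away. The function name and all parameter values (500 nm, 1 m, 1 mm) are hypothetical choices.

```python
import cmath
import math
import random


def degree_of_coherence(d, wavelength=500e-9, z=1.0, width=1e-3,
                        n_emitters=100, n_trials=2000, seed=1):
    """Estimate |mu_12| between P1 at x = 0 and P2 at x = d, a distance z
    from a strip source of the given width (all lengths in metres).

    Assumed model: each emitter has unit amplitude and a single wavelength;
    its phase is redrawn at random in every trial, so the emitters are
    mutually incoherent, like independent surface elements of the source.
    """
    rng = random.Random(seed)
    k = 2 * math.pi / wavelength
    # Emitter positions: evenly spaced midpoints across the source.
    sources = [-width / 2 + (j + 0.5) * width / n_emitters
               for j in range(n_emitters)]
    # Propagation phase factor from each emitter to P1 and to P2.
    path1 = [cmath.exp(1j * k * math.hypot(z, 0.0 - s)) for s in sources]
    path2 = [cmath.exp(1j * k * math.hypot(z, d - s)) for s in sources]

    cross = 0j     # running sum of E1 * conj(E2) over trials
    i1 = i2 = 0.0  # running sums of |E1|^2 and |E2|^2 over trials
    for _ in range(n_trials):
        phases = [cmath.exp(1j * rng.uniform(0, 2 * math.pi))
                  for _ in sources]
        e1 = sum(p * a for p, a in zip(phases, path1))
        e2 = sum(p * b for p, b in zip(phases, path2))
        cross += e1 * e2.conjugate()
        i1 += abs(e1) ** 2
        i2 += abs(e2) ** 2
    # The 1/n_trials normalisations cancel in this ratio.
    return abs(cross) / math.sqrt(i1 * i2)


if __name__ == "__main__":
    # For the default parameters, wavelength * z / width = 0.5 mm.
    for d in (0.0, 0.05e-3, 0.25e-3, 0.5e-3):
        print(f"d = {d * 1e3:.2f} mm   |mu12| ~ {degree_of_coherence(d):.2f}")
```

With these assumed numbers, |μ12| should fall from 1 at d = 0 to roughly zero near d = λz/D = 0.5 mm. That sinc-shaped fall-off is what the van Cittert-Zernike theorem predicts for a uniform strip source, and the separation at which it happens sets the width of the coherence area.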


The ansatz encountered in so many undergraduate textbooks, "the light field can be described by $E = E_0\cos(\omega t - kz)$", is clearly an unsatisfactory starting point for treating real fields from real sources. We usually reassure ourselves with the thought that we can surely superpose ensembles of such primitive representations by means of a Fourier integral. But can we, really? The earliest source I've encountered for a clear and purposeful statement that it is not satisfactory to construct a theory of optical processes from elementary components whose amplitudes, wavelengths and phases are all unobservable was a paper published in Madagascar in (I think) 1934. The author's aim was to develop a description of light fields in which the unobservable oscillations did not figure. Whether Wolf knew of this paper I don't know, but by the mid-1950s he was able to claim that "the theory of partial coherence ... operates with quantities (namely with correlation functions and time-averaged intensities) that may in principle be obtained from experiment. This is in contrast with the elementary optical wave theory, where the basic quantity is not measurable because of the very great rapidity of optical vibrations." From a different standpoint, which is not that of a book on classical physical optics such as the one Born and Wolf wrote, one can add that quantum theory tells us we could not know the phase of the light field of ‘the elementary optical wave theory’ if we knew its intensity. The observable functions of coherence theory, by contrast, don't run foul of quantum-theoretic inhibitions.
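The contrast between the unmeasurable field and the measurable time-averaged intensity can be illustrated with a toy square-law detector. This is an assumed model, not the author's. Averaging $E(t)^2 = E_0^2\cos^2(\omega t + \varphi)$ over a window many optical periods long gives $E_0^2/2$ whatever the phase $\varphi$, so the phase of the elementary wave leaves no trace in what is recorded. The function name, the 5 × 10^14 Hz frequency and the 1 ps window are hypothetical choices; real photodetectors average over far longer times.

```python
import math


def time_averaged_intensity(e0, frequency_hz, phase, window_s,
                            n_samples=100_000):
    """Mean of E(t)**2 for E(t) = e0 * cos(2*pi*f*t + phase) over [0, window_s).

    A crude model of a square-law detector (an assumption for illustration):
    it samples the real field n_samples times across the window and
    returns the average of E**2.
    """
    omega = 2 * math.pi * frequency_hz
    dt = window_s / n_samples
    total = 0.0
    for n in range(n_samples):
        e = e0 * math.cos(omega * n * dt + phase)
        total += e * e
    return total / n_samples


if __name__ == "__main__":
    # Assumed values: 5e14 Hz (optical period 2 fs), 1 ps window = 500 periods.
    for phase in (0.0, 1.0, math.pi / 2):
        value = time_averaged_intensity(1.0, 5e14, phase, 1e-12)
        print(f"phase = {phase:.3f} rad -> <E^2> = {value:.6f}")
```

Every phase should report ⟨E²⟩ ≈ 0.5 = E0²/2, even though the instantaneous fields for different phases differ completely.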


The coherence functions are statistical functions which specify the degree of correlation - or similarity - between the light fields at different points, or different times, or both. And there are four of them.

They are all formed by multiplying together one complex function, describing the field at one point and time, and the complex conjugate of another, describing the field at another point and time. If one contains the (unobservable) frequency $\omega$, and the other does too, then in one case this frequency appears in $e^{-i\omega t}$ and in the other (the complex conjugate) it appears in $e^{+i\omega t}$. When you multiply these together, the frequency disappears. If at a particular time the field at P1 were oscillating at frequency $\omega$, and the field at P2 at a slightly different value $\omega'$, then the multiplication would give

$$e^{-i\omega t}\, e^{+i\omega' t} = e^{-i(\omega - \omega')t},$$

which contains only the difference between the two frequencies. If the light has a narrow spectrum, then $\omega - \omega'$ is very much less than $\omega$. Typically $\omega$ and $\omega'$ might be about $10^{15}$ per second, and $\omega - \omega'$ approximately $10^{9}$ per second. Variations at that rate can be detected photoelectrically (I think this was probably first shown in the 1930s in Glasgow by John Thomson), and coherence functions describe only these relatively slow and observable variations.

The measurable functions of the classical coherence theory are then the mutual coherence, the mutual intensity, the auto-correlation and the intensity. It turns out that if you know the mutual intensity across the whole of any surface through which the light passes, you can predict it across any other surface through which the light passes. Since the intensity at a point is just the mutual intensity with both of its points taken at that same point, it follows that if you know the mutual intensity across any surface you can calculate the intensity everywhere else. The coherence functions can be pictured as propagating through space. The propagation can be described either by a differential wave equation (in six or seven variables) or by an integral which sums, on the second surface, the secondary waves (shades of Huygens and Fresnel!) from the first surface.
And if the first surface is positioned right up against the source, then you have the solution of Verdet's problem: calculating the coherence of the light radiated by any specified source.
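The integral form of the propagation law (Zernike's propagation law for the mutual intensity) can be sketched numerically. The sketch below discretises both surfaces as two parallel lines a distance z apart and applies J2 = K J1 K^H. Here K is a simplified Huygens-Fresnel kernel exp(ikr)/r, with the obliquity factor and constant prefactors dropped. These simplifications, the function names and the geometry are assumptions for illustration. A diagonal J1 on the first line models Verdet's case of a source whose surface elements radiate independently. For contrast, a J1 with all entries equal models a fully coherent source.

```python
import cmath
import math


def propagate_mutual_intensity(j1, sources, points, wavelength, z):
    """Propagate mutual intensity J1, sampled at `sources` on the first line,
    to J2 sampled at `points` on a parallel line a distance z away:
    J2 = K J1 K^H (a discretised Huygens-Fresnel sum over secondary waves).

    K[a][m] = exp(i k r) / r is a simplified scalar kernel (assumed here):
    obliquity factor and constant prefactors are omitted.
    """
    k = 2 * math.pi / wavelength

    def kernel(x, s):
        r = math.hypot(z, x - s)
        return cmath.exp(1j * k * r) / r

    kmat = [[kernel(x, s) for s in sources] for x in points]
    n = len(sources)
    # A = K J1
    a_mat = [[sum(row[m] * j1[m][c] for m in range(n)) for c in range(n)]
             for row in kmat]
    # J2 = A K^H
    return [[sum(a_row[c] * kb[c].conjugate() for c in range(n))
             for kb in kmat] for a_row in a_mat]


def normalised_coherence(j, a, b):
    """|mu_ab| = |J_ab| / sqrt(J_aa J_bb); J_aa is the intensity at point a."""
    return abs(j[a][b]) / math.sqrt(j[a][a].real * j[b][b].real)


# Hypothetical geometry: 1 mm strip source, 500 nm light, second line 1 m away.
wavelength, z, width, n_src = 500e-9, 1.0, 1e-3, 100
sources = [-width / 2 + (m + 0.5) * width / n_src for m in range(n_src)]
points = [0.0, 0.05e-3, 0.5e-3]

# Verdet's case: independent surface elements -> diagonal J1.
incoherent = [[1.0 if m == c else 0.0 for c in range(n_src)]
              for m in range(n_src)]
# For comparison: every element in phase -> J1 with all entries equal.
coherent = [[1.0] * n_src for _ in range(n_src)]

j_inc = propagate_mutual_intensity(incoherent, sources, points, wavelength, z)
j_coh = propagate_mutual_intensity(coherent, sources, points, wavelength, z)
for b in (1, 2):
    print(f"d = {points[b] * 1e3:.2f} mm: "
          f"incoherent |mu| = {normalised_coherence(j_inc, 0, b):.3f}, "
          f"coherent |mu| = {normalised_coherence(j_coh, 0, b):.3f}")
```

For the incoherent source, |μ| should be close to 1 for points 0.05 mm apart and close to 0 at 0.5 mm (= λz/D): the light acquires partial coherence simply by propagating away from the source. The coherent case should give |μ| = 1 for every pair. The intensities on the second line are the diagonal entries `j_inc[a][a]`, which illustrates how knowing the mutual intensity on one surface yields the intensity elsewhere.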